Wearable monitoring is likely to play a key role in the future of health care. In many cases, wearable devices can monitor physiological signals that indicate mental states, such as emotions.

The lab of Rose Faghih has been developing MINDWATCH, a set of algorithms and methods for wearable sensors that collect information from electrical signals in the skin to make inferences about mental activity. While the lab has been successful in translating these physiological signals quickly and effectively, its approach had not incorporated direct feedback about the individual’s subjective experiences.

Now, the researchers are incorporating feedback and labels from users, with the help of machine learning, and combining them with their existing model for a fuller, more accurate picture of mental states.

The research is published in the journal IEEE Transactions on Biomedical Engineering.

At a high level, the human body can be viewed as a complex dynamical system: a conglomeration of control systems, each of which works to manage different variables or states. Unfortunately, a number of the states researchers are interested in, particularly the more abstract ones, cannot be measured directly. These include states of emotion, cognition and consciousness.

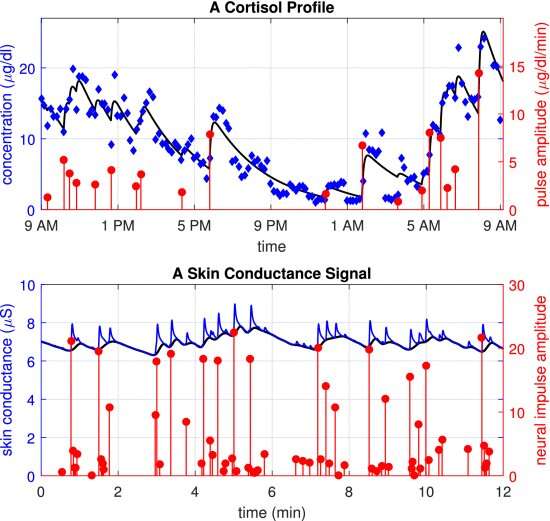

Nevertheless, changes in these unobserved states do give rise to corresponding changes in physiological signals that can be measured more easily. For instance, we may not be able to directly observe or measure a person’s emotional state, but we can measure subtle changes in a person’s heart rate, breathing or sweat secretions (which in turn affect the conductivity of the skin). These signals can then be used to estimate the states of interest.

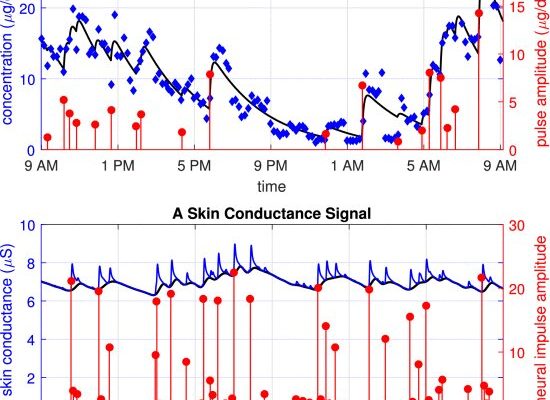

However, the signals researchers use to estimate these unobserved states are “spikey” or “pulsatile” in nature. Existing methods can use these spikey signals to estimate various states of the human body and brain without direct observation, but they offer no means of making those estimates agree with more direct state-related information that may be available. This is especially important in experiments involving human subjects, where the subjects themselves can provide information related to the unobserved state. This type of more direct state-related information can be called a “label.”

Incorporating direct feedback from users offers information that can’t be gleaned from biological data alone. For instance, a person with PTSD could have their skin conductance continuously monitored to provide an emotion estimate, but ideally the final estimate should rely both on these signals and on information obtained, for example, from rating scales or regular questionnaires. The same holds for patients with hormone disorders: hormone measurements do provide valuable information, but should likely be combined with personal feedback (e.g., regarding feelings of energy or lethargy) to obtain a single complete picture. The authors addressed this need in a proof-of-principle work on a hybrid type of estimator.

Estimating states from data in such a way that the predictions agree with available labels falls within the domain of supervised machine learning. This work adapted an existing neural network method for state estimation by adding a term that penalizes disagreement with the labels, yielding a hybrid estimator. The proposed hybrid estimator was used to determine an aspect of emotion tied to changes in skin conductance (through changes in sweat secretions) and to determine energy states within the body based on pulsatile hormone secretions.
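The idea of penalizing disagreement with labels can be illustrated with a toy example. The sketch below is not the authors' neural network method or their marked point process model; it assumes a much simpler setting (a smooth hidden state observed through a noisy, biased signal, with occasional user-provided labels), and all function names, parameters and simulated data are hypothetical. It only shows how an extra penalty term pulls an otherwise unsupervised estimate toward the labels.

```python
import numpy as np


def hybrid_estimate(y, label_idx, label_val, smooth=5.0, label_weight=0.0):
    """Toy hybrid state estimator (illustrative assumption, not the paper's model).

    Finds x minimizing
        sum_t (y_t - x_t)^2                        # fit to the measured signal
      + smooth * sum_t (x_t - x_{t-1})^2           # hidden state changes slowly
      + label_weight * sum_{t in labels} (x_t - label_t)^2   # agree with labels
    The objective is quadratic, so the minimizer solves a linear system.
    label_idx is assumed to contain unique time indices.
    """
    y = np.asarray(y, dtype=float)
    T = len(y)
    D = np.diff(np.eye(T), axis=0)        # (T-1) x T first-difference matrix
    A = np.eye(T) + smooth * D.T @ D      # signal-fit + smoothness terms
    b = y.copy()
    # Label penalty: adds weight on the diagonal and on the right-hand side.
    A[label_idx, label_idx] += label_weight
    b[label_idx] += label_weight * np.asarray(label_val, dtype=float)
    return np.linalg.solve(A, b)


# Simulated data (hypothetical): a slowly varying hidden state, a noisy and
# biased physiological proxy, and sparse self-reported labels.
rng = np.random.default_rng(0)
T = 200
true_state = np.sin(np.linspace(0, 3 * np.pi, T))
y = true_state + 0.5 + rng.normal(0.0, 0.3, T)
label_idx = np.arange(0, T, 20)
label_val = true_state[label_idx]

signal_only = hybrid_estimate(y, label_idx, label_val, label_weight=0.0)
hybrid = hybrid_estimate(y, label_idx, label_val, label_weight=50.0)

err_signal_only = np.mean(np.abs(signal_only[label_idx] - label_val))
err_hybrid = np.mean(np.abs(hybrid[label_idx] - label_val))
print(f"mean disagreement with labels, signal only: {err_signal_only:.3f}")
print(f"mean disagreement with labels, hybrid:      {err_hybrid:.3f}")
```

Setting `label_weight` to zero recovers a purely signal-driven estimate, while larger values trade fidelity to the measured signal for agreement with the user's feedback. In this toy setting the trade-off is a single scalar; how the published method balances the two is described in the paper itself.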

A wearable monitoring system that combines verbal feedback from the user with physiological signals for hybrid estimation could eventually provide a more complete picture of the user and, in turn, more comprehensive closed-loop care.

More information:

Dilranjan S. Wickramasuriya et al, Hybrid Decoders for Marked Point Process Observations and External Influences, IEEE Transactions on Biomedical Engineering (2022). DOI: 10.1109/TBME.2022.3191243

